The Science of Split Testing (And Why You Should be A/B Testing)

Split testing (A/B testing) email campaigns is often confusing - we explain what to do, how and why.

The split test, or as it's commonly referred to, the A/B test, is essentially an experiment where two or more variants of a page are shown to users at random, with statistical analysis used to determine which variation performs better. Traditionally, it is used for testing website landing pages as well as email marketing.

In this article, we focus on email marketing and will talk you through best practices for setting up successful A/B testing.

Email marketing is an effective channel within digital marketing, and campaigns can succeed provided they are run more intelligently than they have been historically. This is where A/B testing has emerged as perhaps the best method of analysis.

A/B TESTING: AN OVERVIEW

A/B Testing allows for testing different versions of a single email. Its purpose is to see how small changes to the email might improve (or even decrease) the results of that email. Those small changes can be made to the subject line or even the send time – most elements of an email can be tested.

The most common testing ideas worth thinking about when looking to conduct A/B testing are:

What day of the week gets better open rates?

Does a subject line with an incentive or a teaser work best?

Does including your company name in your subject line increase engagement?

Is it better to use your name as the from name, or your company's name?

Does the time of day a campaign is sent affect the click rate?

Are subscribers more likely to click a linked image or linked text?

Do subscribers prefer a campaign that contains a GIF or one with static images?

One of the issues we find is that, although the idea of split testing makes sense, many people are unsure how to conduct a test or don’t have access to testing tools. Some don’t know where to start.

Let’s look at this in a little detail...

FIRST, DECIDE WHY YOU ARE TESTING

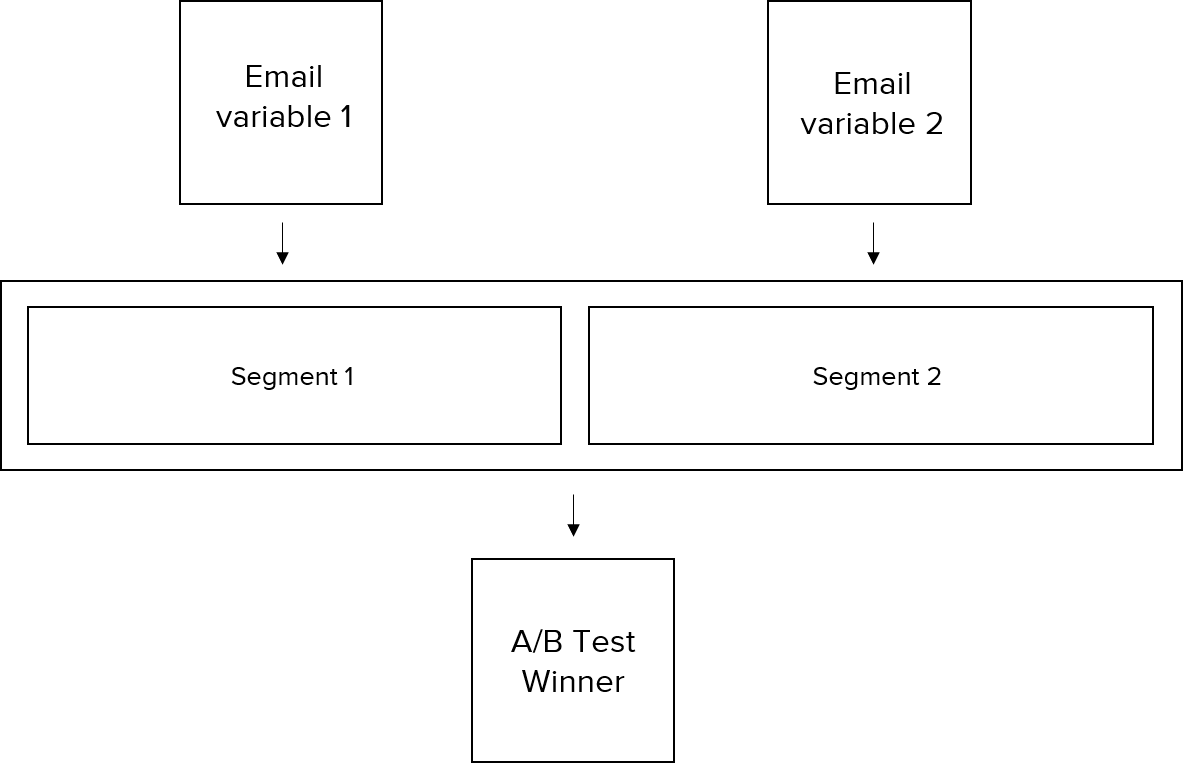

This is the first hurdle, and where many trip up: deciding why you are A/B testing in the first place. There are two basic models for split testing, and both are valid. You need to decide which one is best for you.

MODEL A (50/50)

Quite simply, model A takes your list, splits it in half and sends the control (variable 1) to 50% of the list and variable 2 to the other 50%.

MODEL B (SAMPLING)

Model B involves sending the variables (1 and 2) to a sample of your audience, usually 20-25%, looking at which one performed better and then sending the 'winner' to the remaining 75-80%. This sort of test usually happens on a campaign-by-campaign basis and is most common when conducting regular email campaigns.
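
Both models come down to random assignment. As a minimal sketch in Python, assuming the mailing list is a plain list of addresses: the function names `split_fifty_fifty` and `split_sample`, the `seed` parameter and the 20% default are illustrative choices of ours, not features of any particular email platform.

```python
import random

def split_fifty_fifty(recipients, seed=None):
    """Model A: shuffle the list and give each half one variant."""
    rng = random.Random(seed)
    shuffled = list(recipients)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

def split_sample(recipients, sample_fraction=0.2, seed=None):
    """Model B: test both variants on a sample and hold back the rest
    of the list for whichever variant wins."""
    rng = random.Random(seed)
    shuffled = list(recipients)
    rng.shuffle(shuffled)
    sample_size = round(len(shuffled) * sample_fraction)
    per_variant = sample_size // 2
    group_1 = shuffled[:per_variant]
    group_2 = shuffled[per_variant:2 * per_variant]
    remainder = shuffled[2 * per_variant:]
    return group_1, group_2, remainder

# Hypothetical list of 1,000 addresses
mailing_list = [f"user{i}@example.com" for i in range(1000)]
control, variant = split_fifty_fifty(mailing_list, seed=1)
group_1, group_2, remainder = split_sample(mailing_list, 0.2, seed=1)
```

Shuffling before splitting matters: slicing a list that is sorted by sign-up date or by domain would put systematically different people into each group.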

THEN, MAKE SURE YOU HAVE ENOUGH EMAIL RECIPIENTS (DATA)

Wanting to optimise your emails for higher performance is great, but if you don’t have enough recipients in your mailing list, the results of the test are not likely to be accurate. Anything under 100 email opens isn’t really going to be enough.

Zetatasphere have created a tool to check whether your A/B test results are statistically significant, should you go ahead with the test regardless.
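
To give a feel for what such a check involves, here is a rough sketch of one standard approach, a two-proportion z-test, using only the Python standard library. This is our own illustration and not a description of how any specific tool works; the function name and the example figures are made up, and it assumes both variants recorded at least one open and one non-open.

```python
import math

def two_proportion_z_test(successes_1, total_1, successes_2, total_2):
    """Compare two rates (e.g. opens / recipients for each variant).

    Returns the z statistic and the two-sided p-value.
    Assumes the pooled rate is strictly between 0 and 1.
    """
    rate_1 = successes_1 / total_1
    rate_2 = successes_2 / total_2
    pooled = (successes_1 + successes_2) / (total_1 + total_2)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / total_1 + 1 / total_2))
    z = (rate_2 - rate_1) / std_error
    # Two-sided p-value under the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Made-up example: 200 opens from 1,000 sends vs 250 opens from 1,000 sends
z, p_value = two_proportion_z_test(200, 1000, 250, 1000)
is_significant = p_value < 0.05  # 0.05 is a common, but arbitrary, threshold
```

The smaller the lists, the larger the difference between the two variants needs to be before the p-value drops below the threshold, which is why small mailing lists rarely give a clear winner.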

SOME BASIC RULES

Ensure both tests are sent out at exactly the same time (to the minute)

It sounds obvious, but a gap of even an hour between sends could nullify your results and render the analysis worthless.

Only change one aspect of the test variable

Whether it's the subject line, the send details, the creative or the CTA, whatever element you want to test, change this and only this. If your version 2 has a different subject line and a different CTA button, maybe in a different location, it will be difficult to analyse the difference. If the control has a higher CTR than version 2, you will not be able to tell which change drove this.

If you are using model B, ensure your sample is large enough to give statistically significant results

See above and use software such as Zetatasphere.

IF YOU ARE USING A PUBLISHER'S LIST (LIST RENTAL), ENSURE THE FOLLOWING:

Ensure they understand which method of split test you want them to undertake

Some publishers might not have the capability to carry out model B.

Confirm with your publishers what metrics you are looking for

Each publisher has a different mail delivery platform. From MailChimp, Constant Contact, Sendinblue, ActiveCampaign... the list goes on. With mail delivery software there is, as with everything, the good, the bad, and the ugly. Ensure with the publisher that the software can track the metric you are looking for.

For instance, some mail delivery platforms cannot report on unique clicks as opposed to total clicks, so understand what the platform can and cannot deliver before deployment.

Split testing can also be used in an e-commerce context, where it can improve sales and decrease CPC - you can find out more in this article on split testing for e-commerce by WakeupData.

PICK ONE ELEMENT OR VARIABLE TO TEST

Once the mailing list or the test sample is ready, each test should include only one test variable so that the comparison between the two versions is accurate. This way there is no ambiguity in the results.

So, pick one element and then create two different versions of that element only to test. Variables to test include:

Subject line (Length, word choice, emojis)

Sender

Content

Layout and template

Copy (length, tone)

Personalisation elements

Visuals and image style

CTAs (buttons, no buttons)

Position and size of CTA buttons (top, middle, bottom; large, small)

Offers (no. of offers, links, format)

FINALLY, DECIDE ON THE METRIC BEST SUITED TO THE TEST VARIABLE

OPEN RATE AND CTR

With email marketing, you generally have two metrics that relate to the actual performance of the email itself: open rate and click-through rate (CTR). If the test variable is the subject line or the sender (changing the details of who the email comes from), the open rate is clearly the primary metric. The open rate is simply the number of opens as a percentage of the mailing list.

The CTR is the percentage of those who clicked on a link within the email, most commonly calculated from the total number of recipients of the email, not from the actual opens. Some email providers calculate the CTR from the opens of the email, but strictly speaking that figure is the click-to-open rate (CTO). The CTO takes only those recipients who opened the email and determines the click rate among them.
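
The three rates differ only in what is divided by what. A small sketch, assuming you already have the counts from your mail platform's report; the function name `email_metrics` and the example numbers are hypothetical.

```python
def email_metrics(recipients, unique_opens, unique_clicks):
    """Return open rate, CTR and CTO as percentages.

    open rate = opens / recipients
    CTR       = clicks / recipients
    CTO       = clicks / opens
    """
    open_rate = 100 * unique_opens / recipients
    ctr = 100 * unique_clicks / recipients
    # Avoid dividing by zero when nobody opened the email
    cto = 100 * unique_clicks / unique_opens if unique_opens else 0.0
    return {"open_rate": open_rate, "ctr": ctr, "cto": cto}

# Made-up example: 1,000 recipients, 250 unique opens, 50 unique clicks
metrics = email_metrics(1000, 250, 50)
```

With these example counts the CTO is four times the CTR, so it is worth confirming which of the two a platform is reporting before comparing variants.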

Unsubscribe rates and reply rates are often measured but are not as common as the first two within A/B tests. The conversion rate once on the website can also be a measure of success, but again, it requires more consideration and involves the landing page as well as the email, which was used to prime those landing page visitors.

CLICKS VS UNIQUE CLICKS

As a rule, unique clicks are better for analysis, because they count each recipient only once however many times they click. Try to capture opens and unique opens as well as clicks and unique clicks. (And don't forget about forwards, another good metric for analysis which is often overlooked.)
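
The difference is easy to see with a raw click log. This sketch assumes the log is exported as one (recipient, link) pair per click, which is our assumption about the format rather than something every platform provides; `count_clicks` is an illustrative name.

```python
def count_clicks(click_events):
    """click_events: iterable of (recipient, url) pairs, one per click.

    Returns (total_clicks, unique_clicks), where unique clicks counts
    each recipient once regardless of how often they clicked.
    """
    events = list(click_events)
    total_clicks = len(events)
    unique_clicks = len({recipient for recipient, _url in events})
    return total_clicks, unique_clicks

# Made-up log: one recipient clicks twice, another clicks once
log = [
    ("a@example.com", "/offer"),
    ("a@example.com", "/offer"),
    ("b@example.com", "/offer"),
]
total, unique = count_clicks(log)  # total == 3, unique == 2
```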

SCIENTIFIC EMAIL MARKETING

A/B testing is an essential task for the modern marketer. There really are no right or wrong answers in execution, and both model A and model B are credible strategies. With a little careful planning and by following the advice in this post, understanding what resonates with your audience and what makes them click could yield some easy wins and large ROI.

For more, see another article on B2B email best practices. If you want to have a chat with us at Orientation Marketing about A/B testing or any element of digital marketing, reach out at any time.